Practical MongoDB Aggregations

Author: Paul Done (@TheDonester)

Version: 7.00

Last updated: July 2023 (see Book Version History)

MongoDB versions supported: 4.2 → 7.0

Book published at: www.practical-mongodb-aggregations.com

Content created & assembled at: github.com/pkdone/practical-mongodb-aggregations-book

Acknowledgements - many thanks to the following people for their valuable feedback:

- Jake McInteer

- John Page

- Asya Kamsky

- Mat Keep

- Brian Leonard

- Marcus Eagan

- Elle Shwer

- Ethan Steininger

- Andrew Morgan

Front cover image adapted from a Photo by Henry & Co. from Pexels under the Pexels License (free to use & modify)

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License

Copyright © 2021-2023 Paul Done

ADVERT: Hardcopy Available To Purchase

As part of the official MongoDB Inc. series of books, titled MongoDB Press, a version of this book is published in both paper and downloadable formats by Packt. The Packt versions of the book include additional information in each chapter, as well as two extra example chapters.

You can purchase the book from Packt, Amazon, and other book retailers. Be sure to use the discount code shown on the MongoDB Press page.

Foreword

By Asya Kamsky (@asya999)

I've been involved with databases since the early 1990's when I "accidentally" got a job with a small database company. For the next two decades, databases were synonymous with SQL in my mind, until someone asked me what I thought about these new "No SQL" databases, and MongoDB in particular. I tried MongoDB for a small project I was doing on the side, and the rest, as they say, is history.

When I joined the company that created MongoDB in early 2012, the query language was simple and straightforward but didn't include options for easy data aggregation, because the general advice was "store the data the way you expect to access the data", which was a fantastic approach for fast point queries. As time went on, though, it became clear that sometimes you want to answer questions that you didn't know you'd have when you were first designing the application, and the options for that within the database itself were limited. Map-Reduce was complicated to understand and get right, and required writing and running JavaScript, which was inefficient. This led to a new way to aggregate data natively in the server, which was called "The Aggregation Framework". Since the stages of data processes were organized as a pipeline (familiarly evoking processing files on the Unix command line, for those of us who did such things a lot) we also referred to it as "The Aggregation Pipeline". Very quickly "Agg" became my favorite feature for its flexibility, power and ease of debugging.

We've come a long way in the last nine years, starting with just seven stages and three dozen expressions operating on a single collection, to where we are now: over thirty stages, including special stages providing input to the pipeline, allowing powerful output from the pipeline, including data from other collections in a pipeline, and over one hundred and fifty expressions, available not just in the aggregation command but also in queries and updates.

The nature of data is such that we will never know up-front all the questions we will have about it in the future, so being able to construct complex queries (aka aggregations) about it is critical to success. While complex data processing can be performed in any programming language you are comfortable with, being able to analyze your data without having to move it from where it's currently stored provides a tremendous advantage over exporting and loading the data elsewhere just to be able to use it for your analytics.

For years, I've given talks about the power of the Aggregation Pipeline, answered questions from users about how to do complex analysis with it, and frequently fielded requests for a comprehensive "Aggregation Cookbook". Of course it would be great to have a repository of "recipes" with which to solve common data tasks that involve more than a single stage or expression combination, but it's hard to find the time to sit down and write something like that. This is why I was so stoked to see that my colleague, Paul Done, had just written this book and laid the foundation for that cookbook.

I hope you find this collection of suggestions, general principles, and specific pipeline examples useful in your own application development and I look forward to seeing it grow over time to become the cookbook that will help everyone realize the full power of their data.

Who This Book Is For

This book is for developers, architects, data analysts, data engineers and data scientists who have some familiarity with MongoDB and have already acquired a small amount of rudimentary experience using the MongoDB Aggregation Framework. If you do not yet have this entry level knowledge, don't worry because there are plenty of getting started guides out there. If you've never used the Aggregation Framework before, you should start with one or more of the following resources before using this book:

- The MongoDB Manual, and specifically its Aggregation section

- The MongoDB University free online courses, and specifically the MongoDB Aggregation introduction course

- The MongoDB: The Definitive Guide book by Bradshaw, Brazil & Chodorow, and specifically its section 7. Introduction to the Aggregation Framework

This book is not for complete novices, explaining how you should get started on your first MongoDB aggregation pipeline. Neither is this book a comprehensive programming language guide detailing every nuance of the Aggregation Framework and its syntax. This book intends to assist you with two key aspects:

- Providing a set of opinionated yet easy to digest principles and approaches for increasing your effectiveness in using the Aggregation Framework

- Providing a set of examples for building aggregation pipelines to solve various types of data manipulation and analysis challenges

Introduction

This introduction section of the book helps you understand what MongoDB Aggregations are, the philosophy of Aggregations, what people use them for, and the history of the framework.

Introducing MongoDB Aggregations

What Is MongoDB’s Aggregation Framework?

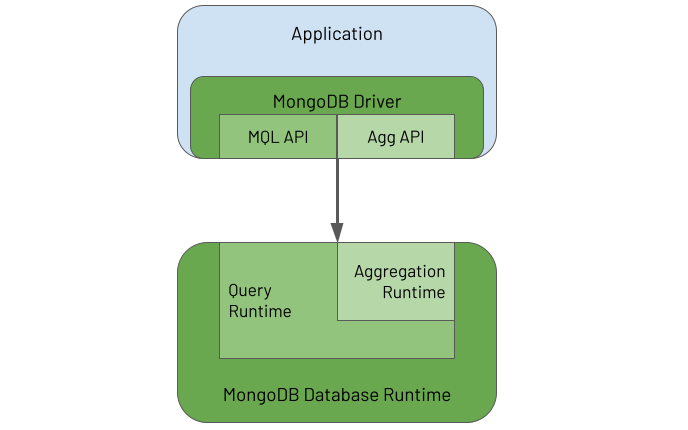

MongoDB’s Aggregation Framework enables users to send an analytics or data processing workload, written using an aggregation language, to the database to execute the workload against the data it holds. You can think of the Aggregation Framework as having two parts:

-

The Aggregations API provided by the MongoDB Driver embedded in each application to enable the application to define an aggregation task called a pipeline and send it to the database for the database to process

-

The Aggregation Runtime running in the database to receive the pipeline request from the application and execute the pipeline against the persisted data

The following diagram illustrates these two elements and their inter-relationship:

The driver provides APIs to enable an application to use both the MongoDB Query Language (MQL) and the Aggregation Framework. In the database, the Aggregation Runtime reuses the Query Runtime to efficiently execute the query part of an aggregation workload that typically appears at the start of an aggregation pipeline.

What Is MongoDB's Aggregations Language?

MongoDB's aggregation pipeline language is somewhat of a paradox. It can appear daunting, yet it is straightforward. It can seem verbose, yet it is lean and to the point. It is Turing complete and able to solve any business problem *. Conversely, it is a strongly opinionated Domain Specific Language (DSL), where, if you attempt to veer away from its core purpose of mass data manipulation, it will try its best to resist you.

* As John Page once showed, you can even code a Bitcoin miner using MongoDB aggregations, not that he (or hopefully anyone for that matter) would ever recommend you do this for real, for both the sake of your bank balance and the environment!

Invariably, for beginners, the Aggregation Framework seems difficult to understand and comes with an initially steep learning curve that you must overcome to become productive. In some programming languages, you only need to master a small set of the language's aspects to be largely effective. With MongoDB aggregations, the initial effort you must invest is slightly greater. However, once mastered, users find it provides an elegant, natural and efficient solution to breaking down a complex set of data manipulations into a series of simple easy to understand steps. This is the point when users achieve the Zen of MongoDB Aggregations, and it is a lovely place to be.

MongoDB's aggregation pipeline language is focused on data-oriented problem-solving rather than business process problem-solving. Depending on how you squint, it can be regarded as a functional programming language rather than a procedural programming language. Why? Well, an aggregation pipeline is an ordered series of statements, called stages, where the entire output of one stage forms the entire input of the next stage, and so on, with no side effects. This functional nature is probably why many users regard the Aggregation Framework as having a steeper learning curve than many languages. Not because it is inherently more difficult to understand but because most developers come from a procedural programming background and not a functional one. Most developers also have to learn how to think like a functional programmer to learn the Aggregation Framework.

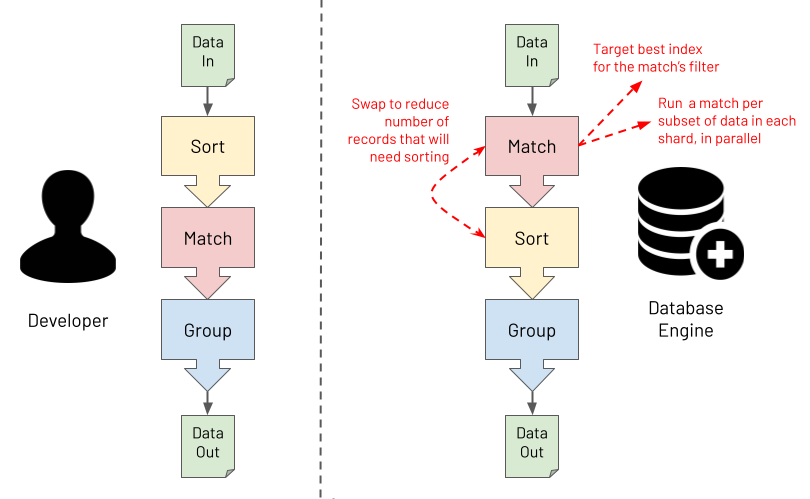

The Aggregation Framework's functional characteristics ultimately make it especially powerful for processing massive data sets. Users focus more on defining "the what" in terms of the required outcome. Users focus less on "the how" of specifying the exact logic to apply to achieve each transformation. You provide one specific and clear advertised purpose for each stage in the pipeline. At runtime, the database engine can then understand the exact intent of each stage. For example, the database engine can obtain clear answers to the questions it asks, such as, "is this stage for performing a filter or is this stage for grouping on some fields?". With this knowledge, the database engine has the opportunity to optimise the pipeline at runtime. The diagram below shows an example of the database performing a pipeline optimisation. It may decide to reorder stages to optimally leverage an index whilst ensuring that the output isn't changed. Or, it may choose to execute some steps in parallel against subsets of the data in different shards, reducing response time whilst again ensuring the output is never changed.
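
As a rough illustration of the kind of reordering described above, the sketch below defines a pipeline that sorts before it filters, alongside a logically equivalent ordering that the database engine is free to execute instead. The collection name persons and the field name dateofbirth are assumptions made purely for illustration, and the plan you actually see will depend on your MongoDB version and the indexes available. The sketch uses new Date() rather than the Shell's ISODate() helper so that it also runs in a plain JavaScript interpreter.

```javascript
// Stage definitions (the field name "dateofbirth" is illustrative only)
var sortStage = {"$sort": {
  "dateofbirth": -1,
}};

var matchStage = {"$match": {
  "dateofbirth": {"$gte": new Date("1970-01-01T00:00:00Z")},
}};

// The pipeline as a developer might write it: sort everything, then filter
var pipelineAsWritten = [
  sortStage,

  matchStage,
];

// A logically equivalent ordering: filter first, then sort only the matches.
// The output is the same, but fewer records need to be sorted.
var equivalentPipeline = [
  matchStage,

  sortStage,
];

// In the Shell, against an assumed "persons" collection, you can ask the
// database for its execution plan to see what it decided to do:
//   db.persons.explain().aggregate(pipelineAsWritten);
```

Because each stage declares its intent (a sort, a filter), the engine can tell that swapping these two stages cannot change the result, which is what makes this kind of optimisation safe.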

Last and by far least in terms of importance is a discussion about syntax. So far, MongoDB aggregations have been described here as a programming language, which it is (a Domain Specific Language). However, with what syntax is a MongoDB aggregation pipeline constructed? The answer is "it depends", and the answer is mostly irrelevant. This book will highlight pipeline examples using MongoDB's Shell and the JavaScript interpreter it runs in. The book will express aggregation pipelines using a JSON based syntax. However, if you are using one of the many programming language drivers that MongoDB provides, you will be using that language to construct an aggregation pipeline, not JSON. An aggregation is specified as an array of objects, regardless of how the programming language may facilitate this. This programmatic rather than textual format has a couple of advantages compared to querying with a string. It has a low vulnerability to injection attacks, and it is highly composable.
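
To make the "array of objects" point more concrete, here is a minimal sketch in plain JavaScript. The helper function matchCustomerStage, the field name customer_name and the collection implied by it are all hypothetical and exist only for this illustration. The sketch shows a user-supplied value being placed into a stage as data, and a pipeline being composed from separately defined parts.

```javascript
// Hypothetical helper: builds a $match stage from a value supplied by an end
// user. The value is placed into the stage as data and is never parsed as
// pipeline syntax.
function matchCustomerStage(customerName) {
  return {"$match": {
    "customer_name": String(customerName),
  }};
}

// A reusable stage that can be appended to any pipeline
var countStage = {"$count": "orders_found"};

// Input crafted in the hope of "breaking out" of the filter, as it might when
// a query is assembled by concatenating strings
var hostileInput = '"}}, {"$out": "stolen_data"}, {"$match": {"x": "';

// Compose the final pipeline from the individual parts
var pipeline = [
  matchCustomerStage(hostileInput),

  countStage,
];

// The pipeline still has exactly two stages, and the hostile text is merely
// the string value that the $match stage compares against
console.log(JSON.stringify(pipeline, null, 2));
```

The String() coercion is a defensive choice made for this sketch: it ensures that an object smuggled in where a string is expected cannot be interpreted as an operator expression. Input validation appropriate to your application is still advisable.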

What's In A Name?

You might have realised by now that there doesn't seem to be one single name for the subject of this book. You will often hear:

- Aggregation

- Aggregations

- Aggregation Framework

- Aggregation Pipeline

- Aggregation Pipelines

- Aggregation Language

- Agg

- ...and so on

The reality is that any of these names are acceptable, and it doesn't matter which you use. This book uses most of these terms at some point. Just take it as a positive sign that this MongoDB capability (and its title) was not born in a marketing boardroom. It was built by database engineers, for data engineers, where the branding was an afterthought at best!

What Do People Use The Aggregation Framework For?

The Aggregation Framework is versatile and used for many different data processing and manipulation tasks. Some typical example uses are for:

- Real-time analytics

- Report generation with roll-ups, sums & averages

- Real-time dashboards

- Redacting data to present via views

- Joining data together from different collections on the "server-side"

- Data science, including data discovery and data wrangling

- Mass data analysis at scale (a la "big data")

- Real-time queries where deeper "server-side" data post-processing is required than provided by the MongoDB Query Language (MQL)

- Copying and transforming subsets of data from one collection to another

- Navigating relationships between records, looking for patterns

- Data masking to obfuscate sensitive data

- Performing the Transform (T) part of an Extract-Load-Transform (ELT) workload

- Data quality reporting and cleansing

- Updating a materialised view with the results of the most recent source data changes

- Performing full-text search (using MongoDB's Atlas Search)

- Representing data ready to be exposed via SQL/ODBC/JDBC (using MongoDB's BI Connector)

- Supporting machine learning frameworks for efficient data analysis (e.g. via MongoDB's Spark Connector)

- ...and many more

History Of MongoDB Aggregations

The Emergence Of Aggregations

MongoDB's developers released the first major version of the database (version 1.0) in February 2009. Back then, both users and the predominant company behind the database, MongoDB Inc. (called 10gen at the time) were still establishing the sort of use cases that the database would excel at and where the critical gaps were. Within half a year of this first major release, MongoDB's engineering team had identified a need to enable materialised views to be generated on-demand. Users needed this capability to maintain counts, sums, and averages for their real-time client applications to query. In December 2009, in time for the following major release (1.2), the database engineers introduced a quick tactical solution to address this gap. This solution involved embedding a JavaScript engine in the database and allowing client applications to submit and execute "server-side" logic using a simple Map-Reduce API.

A Map-Reduce workload essentially does two things. Firstly it scans the data set, looking for the matching subset of records required for the given scenario. This phase may also transform or exclude the fields of each record. This is the "map" action. Secondly, it condenses the subset of matched data into grouped, totalled, and averaged result summaries. This is the "reduce" action. Functionally, MongoDB's Map-Reduce capability provides a solution to users' typical data processing requirements, but it comes with the following drawbacks:

- The database has to bolt in an inherently slow JavaScript engine to execute users' Map-Reduce code.

- Users have to provide two sets of JavaScript logic, a map (or matching) function and a reduce (or grouping) function. Neither is very intuitive to develop, lacking a solid data-oriented bias.

- At runtime, the lack of ability to explicitly associate a specific intent to an arbitrary piece of logic means that the database engine has no opportunity to identify and apply optimisations. It is hard for it to target indexes or reorder some logic for more efficient processing. The database has to be conservative, executing the workload with minimal concurrency and employing locks at various times to prevent race conditions and inconsistent results.

- If returning the response to the client application, rather than sending the output to a collection, the response payload must be less than 16MB.
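
To make the "map" and "reduce" actions described above more tangible, the following sketch shows one simple requirement (total the value of completed orders per customer) written both ways. The orders collection and the status, customer_id and value fields are assumptions invented for this illustration.

```javascript
// Map action: invoked once per record (bound to "this"), it filters for the
// records of interest and emits a key/value pair for each one
var mapFn = function() {
  if (this.status === "COMPLETE") {
    emit(this.customer_id, this.value);
  }
};

// Reduce action: condenses all the values emitted for one key into a total
var reduceFn = function(key, values) {
  var total = 0;

  for (var i = 0; i < values.length; i++) {
    total += values[i];
  }

  return total;
};

// Legacy Map-Reduce invocation in the Shell (assumed "orders" collection):
//   db.orders.mapReduce(mapFn, reduceFn, {"out": {"inline": 1}});

// The same requirement expressed as an aggregation pipeline, where each stage
// advertises its intent (filter, then group) to the database engine
var pipeline = [
  {"$match": {
    "status": "COMPLETE",
  }},

  {"$group": {
    "_id": "$customer_id",
    "total": {"$sum": "$value"},
  }},
];

// Aggregation invocation in the Shell:
//   db.orders.aggregate(pipeline);
```

In the Map-Reduce version, the database sees only two opaque JavaScript functions. In the pipeline version, it can recognise a filter followed by a grouping, which is what gives it room to apply indexes and other optimisations.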

Over the following two years, as user behaviour with Map-Reduce became more understood, MongoDB engineers started to envision a better solution. Also, users were increasingly trying to use Map-Reduce to perform mass data processing given MongoDB's ability to hold large data sets. They were hitting the same Map-Reduce limitations. Users desired a more targeted capability leveraging a data-oriented Domain Specific Language (DSL). The engineers saw how to deliver a framework enabling a developer to define a series of data manipulation steps with valuable composability characteristics. Each step would have a clear advertised intent, allowing the database engine to apply optimisations at runtime. The engineers could also design a framework that would execute "natively" in the database and not require a JavaScript engine. In August 2012, this solution, called the Aggregation Framework, was introduced in the 2.2 version of MongoDB. MongoDB's Aggregation Framework provided a far more powerful, efficient, scalable and easy to use replacement to Map-Reduce.

Within its first year, the Aggregation Framework rapidly became the go-to tool for processing large volumes of data in MongoDB. Now, a decade on, it is like the Aggregation Framework has always been part of MongoDB. It feels like part of the database's core DNA. MongoDB still supports Map-Reduce, but developers rarely use it nowadays. MongoDB aggregation pipelines are always the correct answer for processing data in the database!

It is not widely known, but MongoDB's engineering team re-implemented the Map-Reduce "back-end" in MongoDB 4.4 to execute within the aggregation's runtime. They had to develop additional aggregation stages and operators to fill some gaps. For the most part, these are internal-only stages or operators that the Aggregation Framework does not surface for developers to use in regular aggregations. The two exceptions are the new $function and $accumulator 4.4 operators, which the refactoring work influenced and which now serve as two helpful operators for use in any aggregation pipeline. In MongoDB 4.4, each Map-Reduce "aggregation" still uses JavaScript for certain phases, and so it will not achieve the performance of a native aggregation for an equivalent workload. Nor does this change magically address the other drawbacks of Map-Reduce workloads concerning composability, concurrency, scalability and opportunities for runtime optimisation. The primary purpose of the change was for the database engineers to eliminate redundancy and promote resiliency in the database's codebase. MongoDB version 5.0 deprecated Map-Reduce, and it is likely to be removed in a future version of MongoDB.
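
As a brief illustration of the $function operator mentioned above, the sketch below adds a field computed by custom JavaScript. The name field and the name_reversed output field are assumptions chosen for this example. The operator requires MongoDB 4.4 or greater and a deployment with server-side JavaScript enabled, and because the logic runs in the JavaScript engine, you should expect it to be slower than an equivalent built from native operators.

```javascript
var pipeline = [
  // Add a field computed by custom JavaScript executed inside the database
  {"$set": {
    "name_reversed": {"$function": {
      "body": function(text) {
        // Guard against missing or non-string values
        if (typeof text !== "string") {
          return null;
        }

        return text.split("").reverse().join("");
      },
      "args": ["$name"],
      "lang": "js",
    }},
  }},
];

// In the Shell, against an assumed "persons" collection:
//   db.persons.aggregate(pipeline);
```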

Key Releases & Capabilities

Below is a summary of the evolution of the Aggregation Framework in terms of significant capabilities added in each major release:

- MongoDB 2.2 (August 2012): Initial Release

- MongoDB 2.4 (March 2013): Efficiency improvements (especially for sorts), a concat operator

- MongoDB 2.6 (April 2014): Unlimited size result sets, explain plans, spill to disk for large sorts, an option to output to a new collection, a redact stage

- MongoDB 3.0 (March 2015): Date-to-string operators

- MongoDB 3.2 (December 2015): Sharded cluster optimisations, lookup (join) & sample stages, many new arithmetic & array operators

- MongoDB 3.4 (November 2016): Graph-lookup, bucketing & facets stages, many new array & string operators

- MongoDB 3.6 (November 2017): Array to/from object operators, more extensive date to/from string operators, a REMOVE variable

- MongoDB 4.0 (July 2018): Number to/from string operators, string trimming operators

- MongoDB 4.2 (August 2019): A merge stage to insert/update/replace records in existing non-sharded & sharded collections, set & unset stages to address the verbosity/rigidity of project stages, trigonometry operators, regular expression operators, Atlas Search integration

- MongoDB 4.4 (July 2020): A union stage, custom JavaScript operator expressions (function & accumulator), first & last array element operators, string replacement operators, a random number operator

- MongoDB 5.0 (July 2021): A setWindowFields stage, time-series/window operators, date manipulation operators

- MongoDB 6.0 (July 2022): Support for lookup & graph-lookup stages joining to sharded collections, new densify, documents & fill stages, new array sorting & linearFill operators, new operators to get a subset of ordered arrays or ordered grouped documents

- MongoDB 7.0 (August 2023): A user roles system variable for use in pipelines, new median and percentile operators

Getting Started

For developing aggregation pipelines effectively, and also to try the examples in the second half of this book, you need the following two elements:

- A MongoDB database, version 4.2 or greater, running somewhere which is network accessible from your workstation

- A MongoDB client tool running on your workstation with which to submit aggregation execution requests and to view the results

Note that each example aggregation pipeline shown in the second major part of this book is marked with the minimum version of MongoDB that you must use to execute the pipeline. A few of the example pipelines use aggregation features that MongoDB introduced in releases following version 4.2.

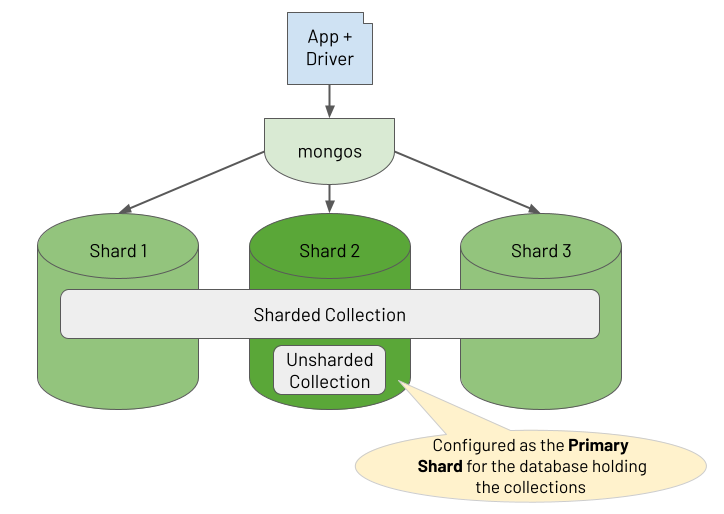

Database

The database deployment for you to connect to can be a single server, a replica set or a sharded cluster. You can run this deployment locally on your workstation or remotely on-prem or in the cloud. It doesn't matter which. You need to know the MongoDB URL for connecting to the database and, if authentication is enabled, the credentials required for full read and write access.

If you don't already have access to a MongoDB database, the two most accessible options for running a database for free are:

- Provision a Free Tier MongoDB Cluster in MongoDB Atlas, which is MongoDB Inc.'s cloud-based Database-as-a-Service (once deployed, in the Atlas Console, there is a button you can click to copy the URL of the cluster)

- Install and run a MongoDB single server locally on your workstation

Note that the aggregation pipelines in the Full-Text Search Examples section leverage Atlas Search. Consequently, you must use Atlas for your database deployment if you want to run those examples.

Client Tool

There are many options for the client tool, some of which are:

- Modern Shell. Install the modern version of MongoDB's command-line tool, the MongoDB Shell: mongosh

- Legacy Shell. Install the legacy version of MongoDB's command-line tool, the Mongo Shell: mongo (you will often find this binary bundled with a MongoDB database installation)

- VS Code. Install MongoDB for VS Code, and use the Playgrounds feature

- Compass. Install the official MongoDB Inc. provided graphical user interface (GUI) tool, MongoDB Compass

- Studio 3T. Install the 3rd party 3T Software Labs provided graphical user interface (GUI) tool, Studio 3T

The book's examples present code in a way that makes it easy to copy and paste into MongoDB's Shell (mongosh or mongo) to execute. All subsequent instructions in this book assume you are using the Shell. However, you will find it straightforward to use one of the mentioned GUI tools instead to consume the code examples. Of the two Shell versions, it is easier to use and view results with the modern Shell.

MongoDB Shell With Atlas Database

Here is an example of how you can start the modern Shell to connect to an Atlas Free Tier MongoDB Cluster (change the text mongosh to mongo if you are using the legacy Shell):

mongosh "mongodb+srv://mycluster.a123b.mongodb.net/test" --username myuser

Note before running the command above, ensure:

- You have added your workstation's IP address to the Atlas Access List

- You have created a database user for the deployed Atlas cluster, with rights to create, read and write to any database

- You have changed the dummy URL and username text, shown in the above example command, to match your real cluster's details (these details are accessible via the cluster's Connect button in the Atlas Console)

MongoDB Shell With Local Database

Here is an example of how you can start the modern Shell to connect to a MongoDB single server database if you've installed one locally on your workstation (change the text mongosh to mongo if you are using the legacy Shell):

mongosh "mongodb://localhost:27017"

MongoDB For VS Code

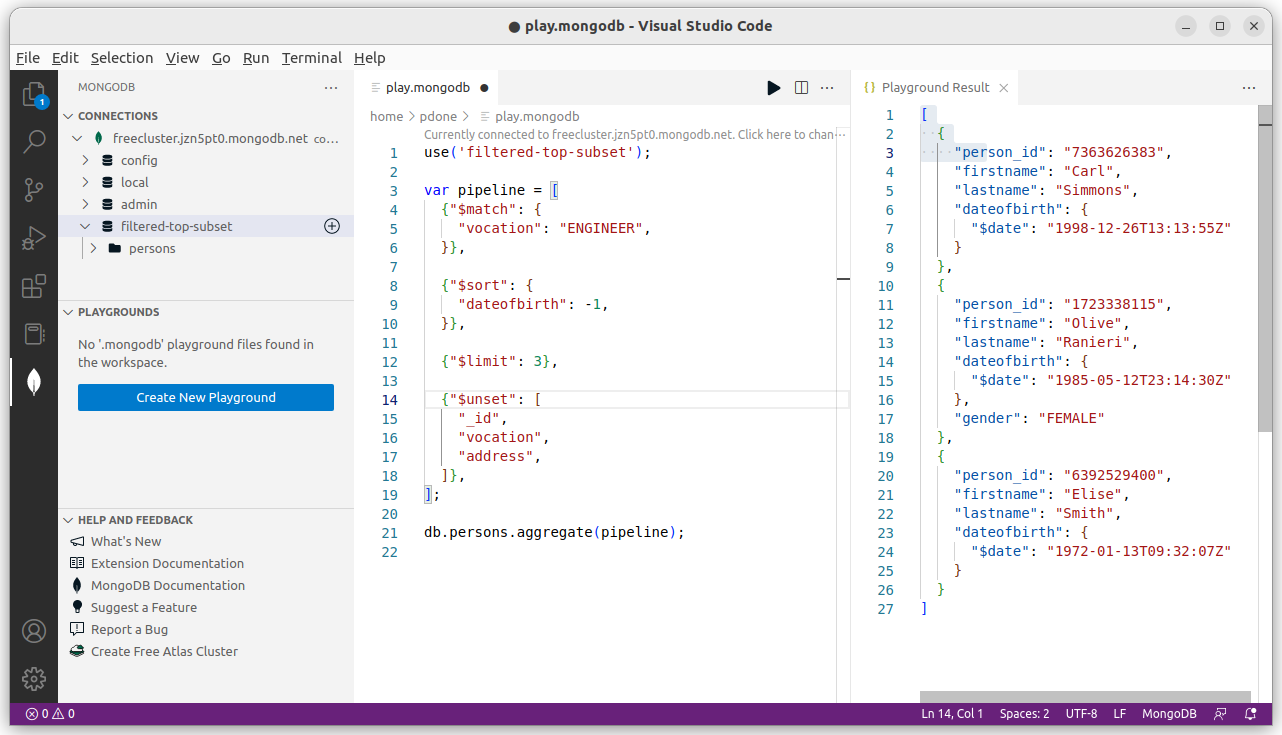

Using the MongoDB playground tool in VS Code, you can quickly prototype queries and aggregation pipelines and then execute them against a MongoDB database with the results shown in an output tab. Below is a screenshot of the playground tool in action:

MongoDB Compass GUI

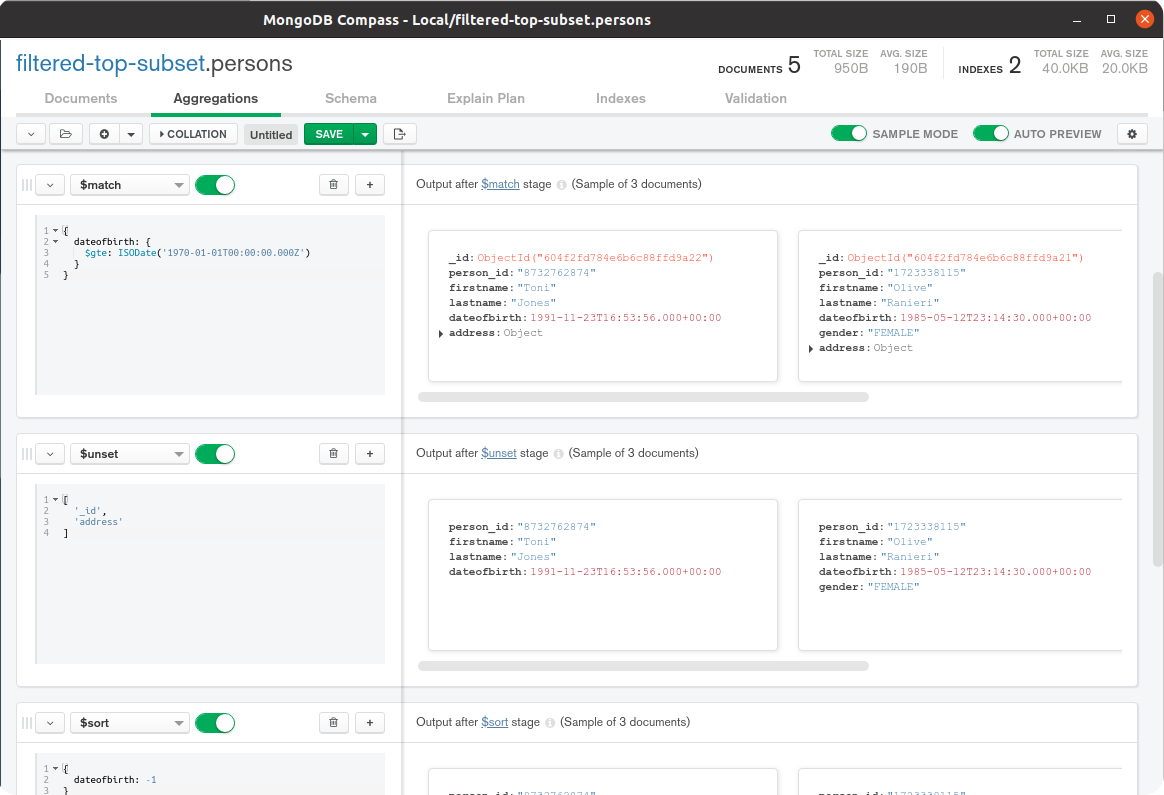

MongoDB Compass provides an Aggregation Pipeline Builder tool to assist users in prototyping and debugging aggregation pipelines and exporting them to different programming languages. Below is a screenshot of the aggregation tool in Compass:

Studio 3T GUI

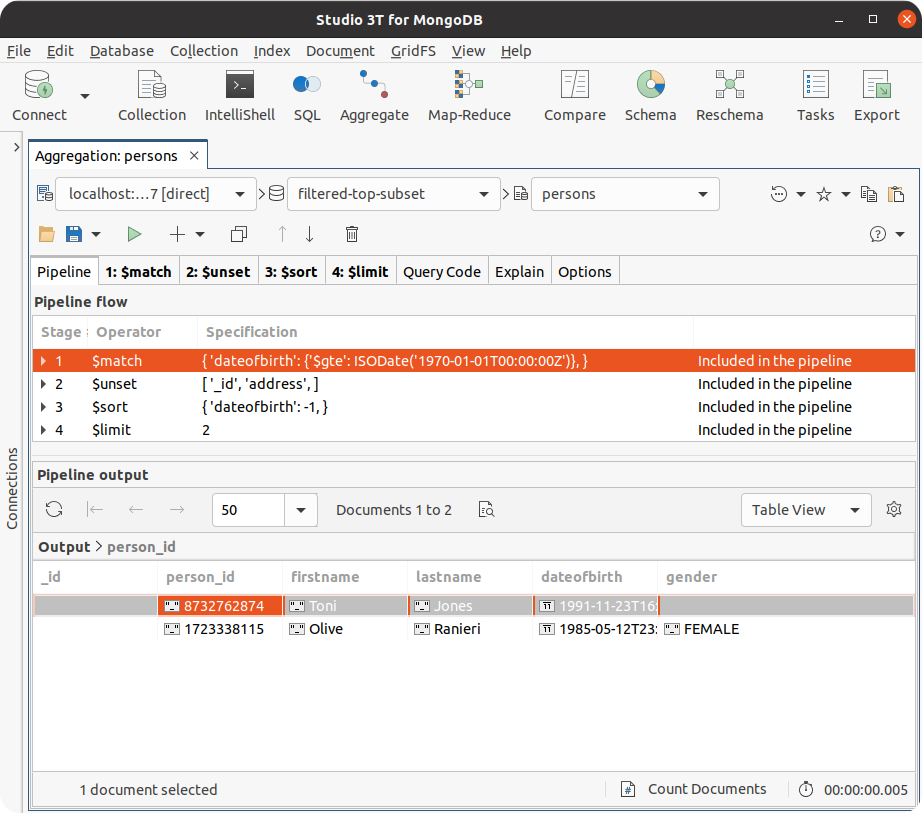

Studio 3T provides an Aggregation Editor tool to help users prototype and debug aggregation pipelines and translate them to different programming languages. Below is a screenshot of the aggregation tool in Studio 3T:

Getting Help

No one can hold the names and syntax of all the different aggregation stages and operators in their heads. I'd bet even MongoDB Aggregations Royalty (Asya Kamsky) couldn't, although I'm sure she would give it a good go!

The good news is there is no need for you to try to remember all the stages & operators. The MongoDB online documentation provides you with a set of excellent references here:

- MongoDB Aggregation Pipeline Stages reference

- MongoDB Aggregation Pipeline Operators reference

To help you get started with the purpose of each stage in the MongoDB Aggregation Framework, consult the "cheatsheets" in the appendix of this book:

- MongoDB Aggregation Stages Cheatsheet

- MongoDB Aggregation Stages Cheatsheet Source Code

If you are getting stuck with an aggregation pipeline and want some help, an active online community will almost always have the answer. So pose your questions at either:

- The MongoDB Community Forums

- Stack Overflow - MongoDB Questions

You may be asking for just general advice. However, suppose you want to ask for help on a specific aggregation pipeline under development. In that case, you should provide a sample input document, a copy of your current pipeline code (in its JSON syntax format and not a programming language specific format) and an example of the output that you are trying to achieve. If you provide this extra information, you will have a far greater chance of receiving a timely and optimal response.

Guiding Tips & Principles

The following set of chapters provides opinionated yet easy-to-digest principles and approaches for increasing effectiveness, productivity, and performance when developing aggregation pipelines.

Embrace Composability For Increased Productivity

An aggregation pipeline is an ordered series of instructions, called stages. The entire output of one stage forms the whole input of the next stage, and so on, with no side effects. Pipelines exhibit high composability where stages are stateless self-contained components selected and assembled in various combinations (pipelines) to satisfy specific requirements. This composability promotes iterative prototyping, with straightforward testing after each increment.

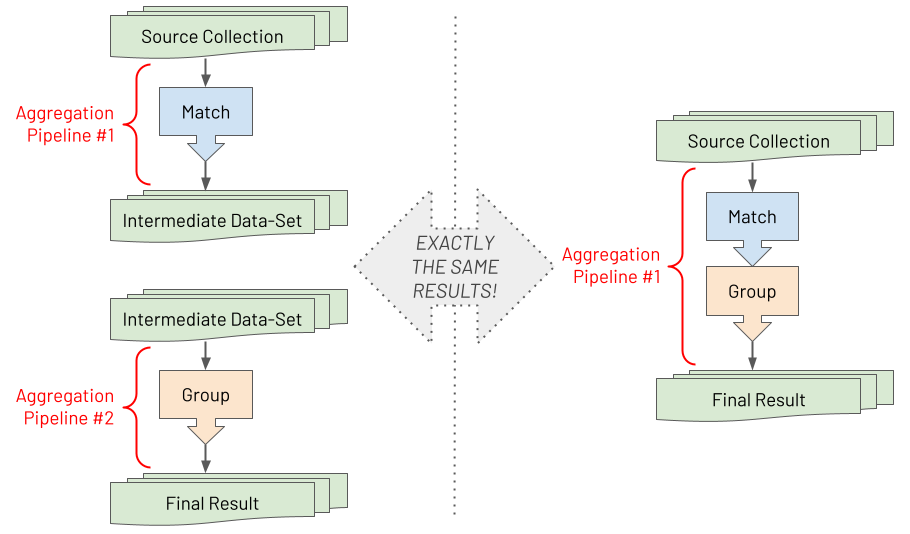

With MongoDB's aggregations, you can take a complex problem, requiring a complex aggregation pipeline, and break it down into straightforward individual stages, where each step can be developed and tested in isolation first. To better comprehend this composability, it may be helpful to internalise the following visual model.

Suppose you have two pipelines with one stage in each. After saving the intermediate results by running the first pipeline, you run the second pipeline against the saved intermediate data set. The final result is the same as running a single pipeline containing both stages serially. There is no difference between the two. As a developer, you can reduce the cognitive load by understanding how a problem can be broken down in this way when building aggregation pipelines. Aggregation pipelines enable you to decompose a big challenge into lots of minor challenges. By embracing this approach of first developing each stage separately, you will find even the most complex challenges become surmountable.
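
The equivalence described above can be sketched as follows. The collection names persons and persons_intermediate and the dateofbirth field are assumptions for illustration only. The first pipeline gains an extra $out stage purely to persist its intermediate results, and new Date() is used in place of the Shell's ISODate() helper so that the definitions also run in a plain JavaScript interpreter.

```javascript
// Two simple stages, each developed and tested on its own
var matchStage = {"$match": {
  "dateofbirth": {"$gte": new Date("1970-01-01T00:00:00Z")},
}};

var sortStage = {"$sort": {
  "dateofbirth": -1,
}};

// Only needed to save the intermediate results of the first pipeline
var saveStage = {"$out": "persons_intermediate"};

// Approach 1: two pipelines, run one after the other
var firstPipeline = [
  matchStage,

  saveStage,
];

var secondPipeline = [
  sortStage,
];

// Approach 2: a single pipeline containing both stages serially
var combinedPipeline = [
  matchStage,

  sortStage,
];

// In the Shell, the two approaches would be executed like this:
//   db.persons.aggregate(firstPipeline);
//   db.persons_intermediate.aggregate(secondPipeline);
//
//   db.persons.aggregate(combinedPipeline);
```

Both approaches apply the same filter followed by the same sort, so they yield the same records. The only difference is whether the intermediate data set is written to a collection along the way.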

Specific Tips To Promote Composability

In reality, once most developers become adept at using the Aggregation Framework, they tend not to rely on temporary intermediate data sets whilst prototyping each stage. However, it is still a reasonable development approach if you prefer it. Instead, seasoned aggregation pipeline developers typically comment out one or more stages of an aggregation pipeline when using MongoDB's Shell (or they use the "disable stage" capability provided by the GUI tools for MongoDB).

To encourage composability and hence productivity, some of the principles to strive for are:

- Easy disabling of subsets of stages, whilst prototyping or debugging

- Easy addition of new fields to a stage or new stages to a pipeline by performing a copy, a paste and then a modification without hitting cryptic error messages resulting from issues like missing a comma before the added element

- Easy appreciation of each distinct stage's purpose, at a glance

With these principles in mind, the following is an opinionated list of guidelines for how you should textually craft your pipelines in JavaScript to improve your pipeline development pace:

- Don't start or end a stage on the same line as another stage

- For every field in a stage, and stage in a pipeline, include a trailing comma even if it is currently the last item

- Include an empty newline between every stage

- For complex stages include a // comment with an explanation on a newline before the stage

- To "disable" some stages of a pipeline whilst prototyping or debugging another stage, use the multi-line comment /* prefix and */ suffix

Below is an example of a poor pipeline layout if you have followed none of the guiding principles:

    // BAD

    var pipeline = [
      {"$unset": [
        "_id",
        "address"
      ]}, {"$match": {
        "dateofbirth": {"$gte": ISODate("1970-01-01T00:00:00Z")}
      }}//, {"$sort": {
      //  "dateofbirth": -1,
      //}}, {"$limit": 2}
    ];

Whereas the following is an example of a far better pipeline layout, where you meet all of the guiding principles:

    // GOOD

    var pipeline = [
      {"$unset": [
        "_id",
        "address",
      ]},

      // Only match people born on or after 1st January 1970
      {"$match": {
        "dateofbirth": {"$gte": ISODate("1970-01-01T00:00:00Z")},
      }},

      /*
      {"$sort": {
        "dateofbirth": -1,
      }},

      {"$limit": 2},
      */
    ];

Notice trailing commas are included in the code snippet, at both the end of stage level and end of field level.

It is worth mentioning that some (but not all) developers take an alternative but equally valid approach to constructing a pipeline. They decompose each stage in the pipeline into different JavaScript variables, where each stage's variable is defined separately, as shown in the example below:

    // GOOD

    var unsetStage = {
      "$unset": [
        "_id",
        "address",
      ]};

    var matchStage = {
      "$match": {
        "dateofbirth": {"$gte": ISODate("1970-01-01T00:00:00Z")},
      }};

    var sortStage = {
      "$sort": {
        "dateofbirth": -1,
      }};

    var limitStage = {"$limit": 2};

    var pipeline = [
      unsetStage,
      matchStage,
      sortStage,
      limitStage,
    ];

Furthermore, some developers may take additional steps if they do not intend to transfer the prototyped pipeline to a different programming language:

- They may choose to decompose elements inside a stage into additional JavaScript variables to avoid code "typos". For instance, to prevent one part of a pipeline incorrectly referencing a field computed earlier in the pipeline due to a misspelling.

- They may choose to factor out the generation of some boilerplate code, representing a complex set of expressions, from part of a pipeline into a separate JavaScript function. This new function is essentially a macro. They can then reuse this function from multiple places within the main pipeline's code. Whenever the pipeline invokes this function, the pipeline's body directly embeds the returned boilerplate code. The Array Sorting & Percentiles chapter, later in this book, provides an example of this approach.
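As a minimal sketch of the two techniques just described, the snippet below holds a computed field's name in a single JavaScript variable and factors a boilerplate expression out into a macro-like function. The names AGE_BAND_FIELD and bornOnOrAfter() are hypothetical and chosen only for illustration, and new Date() stands in for the Shell's ISODate() so that the snippet also loads in plain JavaScript:

```javascript
// Name of the computed field, defined once so later stages cannot misspell it
var AGE_BAND_FIELD = "age_band";

// Hypothetical macro: returns the boilerplate expression that labels a record
// depending on whether the given date field is on or after a cut-off date
function bornOnOrAfter(fieldName, cutoffDate, labelIfTrue, labelIfFalse) {
  return {"$cond": {
    "if": {"$gte": ["$" + fieldName, cutoffDate]},
    "then": labelIfTrue,
    "else": labelIfFalse,
  }};
}

var pipeline = [
  // The macro's returned expression is embedded directly in the stage
  {"$set": {
    [AGE_BAND_FIELD]: bornOnOrAfter("dateofbirth", new Date("1970-01-01T00:00:00Z"), "1970_ONWARDS", "PRE_1970"),
  }},

  // Reference the computed field via the same variable
  {"$match": {
    [AGE_BAND_FIELD]: "1970_ONWARDS",
  }},
];
```

Because the function runs on the client before the pipeline is sent, the database only ever sees the expanded expression, not the function itself.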

In summary, this book is not advocating a multi-variable approach over a single-variable approach when you define a pipeline. The book is just highlighting two highly composable options. Ultimately it is a personal choice concerning which you find most comfortable and productive.

Better Alternatives To A Project Stage

The quintessential tool used in MongoDB's Query Language (MQL) to define or restrict fields to return is a projection. In the MongoDB Aggregation Framework, the analogous facility for specifying fields to include or exclude is the $project stage. For many earlier versions of MongoDB, this was the only tool to define which fields to keep or omit. However, $project comes with a few usability challenges:

- $project is confusing and non-intuitive. You can only choose to include fields or exclude fields in a single stage, but not both. There is one exception, though, where you can exclude the _id field yet still define other fields to include (this only applies to the _id field). It's as if $project has an identity crisis.

- $project is verbose and inflexible. If you want to define one new field or revise one field, you will have to name all other fields in the projection to include. If each input record has 100 fields and the pipeline needs to employ a $project stage for the first time, things become tiresome. To include a new 101st field, you now also have to name all the original 100 fields in this new $project stage too. You will find this irritating if you have an evolving data model, where additional new fields appear in some records over time. Because you use a $project for inclusion, then each time a new field appears in the data set, you must go back to the old aggregation pipeline to modify it to name the new field explicitly for inclusion in the results. This is the antithesis of flexibility and agility.

In MongoDB version 4.2, the $set and $unset stages were introduced, which, in most cases, are preferable to using $project for declaring field inclusion and exclusion. They make the code's intent much clearer, lead to less verbose pipelines, and, critically, they reduce the need to refactor a pipeline whenever the data model evolves. How this works and guidance on when to use $set & $unset stages is described in the section When To Use Set & Unset, further below.

Despite the challenges, though, there are some specific situations where using $project is advantageous over $set/$unset. These situations are described in the section When To Use Project further below.

MongoDB version 3.4 addressed some of the disadvantages of $project by introducing a new $addFields stage, which has the same behaviour as $set. $set came later than $addFields and is actually just an alias for $addFields. Both $set and $unset stages are available in modern versions of MongoDB, and their opposing purposes are easy to deduce from their names ($set vs $unset). The name $addFields doesn't fully reflect that you can modify existing fields rather than just adding new fields. This book prefers $set over $addFields to help promote consistency and avoid any confusion of intent. However, if you are wedded to $addFields, use that instead, as there is no behavioural difference.

When To Use $set & $unset

You should use $set & $unset stages when you need to retain most of the fields in the input records, and you want to add, modify or remove a minority subset of fields. This is the case for most uses of aggregation pipelines.

For example, imagine there is a collection of credit card payment documents similar to the following:

// INPUT (a record from the source collection to be operated on by an aggregation)

{

_id: ObjectId("6044faa70b2c21f8705d8954"),

card_name: "Mrs. Jane A. Doe",

card_num: "1234567890123456",

card_expiry: "2023-08-31T23:59:59.736Z",

card_sec_code: "123",

card_provider_name: "Credit MasterCard Gold",

transaction_id: "eb1bd77836e8713656d9bf2debba8900",

transaction_date: ISODate("2021-01-13T09:32:07.000Z"),

transaction_curncy_code: "GBP",

transaction_amount: NumberDecimal("501.98"),

reported: true

}

Then imagine an aggregation pipeline is required to produce modified versions of the documents, as shown below:

// OUTPUT (a record in the results of the executed aggregation)

{

card_name: "Mrs. Jane A. Doe",

card_num: "1234567890123456",

card_expiry: ISODate("2023-08-31T23:59:59.736Z"), // Field type converted from text

card_sec_code: "123",

card_provider_name: "Credit MasterCard Gold",

transaction_id: "eb1bd77836e8713656d9bf2debba8900",

transaction_date: ISODate("2021-01-13T09:32:07.000Z"),

transaction_curncy_code: "GBP",

transaction_amount: NumberDecimal("501.98"),

reported: true,

card_type: "CREDIT" // New added literal value field

}

Here, shown by the // comments, there was a requirement to modify each document's structure slightly, to convert the card_expiry text field into a proper date field, and add a new card_type field, set to the value "CREDIT", for every record.

Naively you might decide to build an aggregation pipeline using a $project stage to achieve this transformation, which would probably look similar to the following:

// BAD

[

{"$project": {

// Modify a field + add a new field

"card_expiry": {"$dateFromString": {"dateString": "$card_expiry"}},

"card_type": "CREDIT",

// Must now name all the other fields for those fields to be retained

"card_name": 1,

"card_num": 1,

"card_sec_code": 1,

"card_provider_name": 1,

"transaction_id": 1,

"transaction_date": 1,

"transaction_curncy_code": 1,

"transaction_amount": 1,

"reported": 1,

// Remove _id field

"_id": 0,

}},

]

As you can see, the pipeline's stage is quite lengthy, and because you use a $project stage to modify/add two fields, you must also explicitly name each other existing field from the source records for inclusion. Otherwise, you will lose those fields during the transformation. Imagine if each payment document has hundreds of possible fields, rather than just ten!

A better approach to building the aggregation pipeline, to achieve the same results, would be to use $set and $unset instead, as shown below:

// GOOD

[

{"$set": {

// Modified + new field

"card_expiry": {"$dateFromString": {"dateString": "$card_expiry"}},

"card_type": "CREDIT",

}},

{"$unset": [

// Remove _id field

"_id",

]},

]

This time, when you need to add new documents to the collection of existing payments, which include additional new fields, e.g. settlement_date & settlement_curncy_code, no changes are required. The existing aggregation pipeline allows these new fields to appear in the results automatically. However, when using $project, each time the possibility of a new field arises, a developer must first refactor the pipeline to incorporate an additional inclusion declaration (e.g. "settlement_date": 1, or "settlement_curncy_code": 1).

When To Use $project

It is best to use a $project stage when the required shape of output documents is very different from the input documents' shape. This situation often arises when you do not need to include most of the original fields.

This time for the same input payments collection, let us imagine you require a new aggregation pipeline to produce result documents. You need each output document's structure to be very different from the input structure, and you need to retain far fewer original fields, similar to the following:

// OUTPUT (a record in the results of the executed aggregation)

{

transaction_info: {

date: ISODate("2021-01-13T09:32:07.000Z"),

amount: NumberDecimal("501.98")

},

status: "REPORTED"

}

Using $set/$unset in the pipeline to achieve this output structure would be verbose and would require naming all the fields (for exclusion this time), as shown below:

// BAD

[

{"$set": {

// Add some fields

"transaction_info.date": "$transaction_date",

"transaction_info.amount": "$transaction_amount",

"status": {"$cond": {"if": "$reported", "then": "REPORTED", "else": "UNREPORTED"}},

}},

{"$unset": [

// Remove _id field

"_id",

// Must name all other existing fields to be omitted

"card_name",

"card_num",

"card_expiry",

"card_sec_code",

"card_provider_name",

"transaction_id",

"transaction_date",

"transaction_curncy_code",

"transaction_amount",

"reported",

]},

]

However, by using $project for this specific aggregation, as shown below, to achieve the same results, the pipeline will be less verbose. The pipeline will have the flexibility of not requiring modification if you ever make subsequent additions to the data model, with new previously unknown fields:

// GOOD

[

{"$project": {

// Add some fields

"transaction_info.date": "$transaction_date",

"transaction_info.amount": "$transaction_amount",

"status": {"$cond": {"if": "$reported", "then": "REPORTED", "else": "UNREPORTED"}},

// Remove _id field

"_id": 0,

}},

]

Another potential downside can occur when using $project to define field inclusion, rather than using $set (or $addFields). When using $project to declare all required fields for inclusion, it can be easy for you to carelessly specify more fields from the source data than intended. Later on, if the pipeline contains something like a $group stage, this will cover up your mistake. The final aggregation's output will not include the erroneous field. You might ask, "Why is this a problem?". Well, what happens if you intended for the aggregation to take advantage of a covered index query for the few fields it requires, to avoid unnecessarily accessing the raw documents? In most cases, MongoDB's aggregation engine can track fields' dependencies throughout a pipeline and, left to its own devices, can understand which fields are not required. However, you would be overriding this capability by explicitly asking for the extra field. A common error is to forget to exclude the _id field in the projection inclusion stage, and so it will be included by default. This mistake will silently kill the potential optimisation. If you must use a $project stage, try to use it as late as possible in the pipeline because it is then clear to you precisely what you are asking for as the aggregation's final output. Also, unnecessary fields like _id may already have been identified by the aggregation engine as no longer required, due to the occurrence of an earlier $group stage, for example.

Main Takeaway

In summary, you should always look to use $set (or $addFields) and $unset for field inclusion and exclusion, rather than $project. The main exception is if you have an obvious requirement for a very different structure for result documents, where you only need to retain a small subset of the input fields.

Using Explain Plans

When using the MongoDB Query Language (MQL) to develop queries, it is important to view the explain plan for a query to determine if you've used the appropriate index and if you need to optimise other aspects of the query or the data model. An explain plan allows you to fully understand the performance implications of the query you have created.

The same applies to aggregation pipelines and the ability to view an explain plan for the executed pipeline. However, with aggregations, an explain plan tends to be even more critical because considerably more complex logic can be assembled and run in the database. There are far more opportunities for performance bottlenecks to occur, requiring optimisation.

The MongoDB database engine will do its best to apply its own aggregation pipeline optimisations at runtime. Nevertheless, there could be some optimisations that only you can make. A database engine should never optimise a pipeline in such a way as to risk changing the functional behaviour and outcome of the pipeline. The database engine doesn't always have the extra context that your brain has, relating to the actual business problem at hand. It may not be able to make some types of judgement calls about what pipeline changes to apply to make it run faster. The availability of an explain plan for aggregations enables you to bridge this gap. It allows you to understand the database engine's applied optimisations and detect further potential optimisations you can manually implement in the pipeline.

Viewing An Explain Plan

To view the explain plan for an aggregation pipeline, you can execute commands such as the following:

db.coll.explain().aggregate([{"$match": {"name": "Jo"}}]);

In this book, you will already have seen the convention of first defining a separate variable for the pipeline and then calling the aggregate() function, passing in the pipeline as an argument, as shown here:

db.coll.aggregate(pipeline);

By adopting this approach, it's easier for you to use the same pipeline definition interchangeably with different commands. Whilst prototyping and debugging a pipeline, it is handy for you to be able to quickly switch from executing the pipeline to instead generating the explain plan for the same defined pipeline, as follows:

db.coll.explain().aggregate(pipeline);

As with MQL, there are three different verbosity modes that you can generate an explain plan with, as shown below:

// QueryPlanner verbosity (default if no verbosity parameter provided)

db.coll.explain("queryPlanner").aggregate(pipeline);

// ExecutionStats verbosity

db.coll.explain("executionStats").aggregate(pipeline);

// AllPlansExecution verbosity

db.coll.explain("allPlansExecution").aggregate(pipeline);

In most cases, you will find that running the executionStats variant is the most informative mode. Rather than showing just the query planner's thought process, it also provides actual statistics on the "winning" execution plan (e.g. the total keys examined, the total docs examined, etc.). However, this isn't the default because it actually executes the aggregation in addition to formulating the query plan. If the source collection is large or the pipeline is suboptimal, it will take a while to return the explain plan result.
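As a small sketch of how the same pipeline variable can be switched between execution and explanation, the helper below accepts an optional verbosity mode. The function name runOrExplain() is hypothetical and not part of any MongoDB API; it only wraps the aggregate() and explain() calls shown above:

```javascript
// Hypothetical helper: run the pipeline, or return its explain plan when a
// verbosity ("queryPlanner", "executionStats" or "allPlansExecution") is given
function runOrExplain(coll, pipeline, verbosity) {
  if (verbosity) {
    return coll.explain(verbosity).aggregate(pipeline);
  }

  return coll.aggregate(pipeline);
}

// Example usage from the MongoDB Shell:
//   runOrExplain(db.coll, pipeline);                    // Execute the pipeline
//   runOrExplain(db.coll, pipeline, "executionStats");  // Explain it instead
```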

Note, the aggregate() function also provides a vestigial explain option to ask for an explain plan to be generated and returned. Nonetheless, this is more limited and cumbersome to use, so you should avoid it.

Understanding The Explain Plan

To provide an example, let us assume a shop's data set includes information on each customer and what retail orders the customer has made over the years. The customer orders collection contains documents similar to the following example:

{

"customer_id": "elise_smith@myemail.com",

"orders": [

{

"orderdate": ISODate("2020-01-13T09:32:07Z"),

"product_type": "GARDEN",

"value": NumberDecimal("99.99")

},

{

"orderdate": ISODate("2020-05-30T08:35:52Z"),

"product_type": "ELECTRONICS",

"value": NumberDecimal("231.43")

}

]

}

You've defined an index on the customer_id field. You create the following aggregation pipeline to show the three most expensive orders made by the customer whose ID is tonijones@myemail.com:

var pipeline = [

// Unpack each order from customer orders array as a new separate record

{"$unwind": {

"path": "$orders",

}},

// Match on only one customer

{"$match": {

"customer_id": "tonijones@myemail.com",

}},

// Sort customer's purchases by most expensive first

{"$sort" : {

"orders.value" : -1,

}},

// Show only the top 3 most expensive purchases

{"$limit" : 3},

// Use the order's value as a top level field

{"$set": {

"order_value": "$orders.value",

}},

// Drop the document's id and orders sub-document from the results

{"$unset" : [

"_id",

"orders",

]},

];

Upon executing this aggregation against an extensive sample data set, you receive the following result:

[

{

customer_id: 'tonijones@myemail.com',

order_value: NumberDecimal("1024.89")

},

{

customer_id: 'tonijones@myemail.com',

order_value: NumberDecimal("187.99")

},

{

customer_id: 'tonijones@myemail.com',

order_value: NumberDecimal("4.59")

}

]

You then request the query planner part of the explain plan:

db.customer_orders.explain("queryPlanner").aggregate(pipeline);

The query plan output for this pipeline shows the following (excluding some information for brevity):

stages: [

{

'$cursor': {

queryPlanner: {

parsedQuery: { customer_id: { '$eq': 'tonijones@myemail.com' } },

winningPlan: {

stage: 'FETCH',

inputStage: {

stage: 'IXSCAN',

keyPattern: { customer_id: 1 },

indexName: 'customer_id_1',

direction: 'forward',

indexBounds: {

customer_id: [

'["tonijones@myemail.com", "tonijones@myemail.com"]'

]

}

}

},

}

}

},

{ '$unwind': { path: '$orders' } },

{ '$sort': { sortKey: { 'orders.value': -1 }, limit: 3 } },

{ '$set': { order_value: '$orders.value' } },

{ '$project': { _id: false, orders: false } }

]

You can deduce some illuminating insights from this query plan:

- To optimise the aggregation, the database engine has reordered the pipeline, positioning the filter belonging to the $match at the top of the pipeline. The database engine moves the content of $match ahead of the $unwind stage without changing the aggregation's functional behaviour or outcome.

- The first stage of the database optimised version of the pipeline is an internal $cursor stage, regardless of the order you placed the pipeline stages in. The $cursor runtime stage is always the first action executed for any aggregation. Under the covers, the aggregation engine reuses the MQL query engine to perform a "regular" query against the collection, with a filter based on the aggregation's $match contents. The aggregation runtime uses the resulting query cursor to pull batches of records. This is similar to how a client application with a MongoDB driver uses a query cursor when remotely invoking an MQL query to pull batches. As with a normal MQL query, the regular database query engine will try to use an index if it makes sense. In this case an index is indeed leveraged, as is visible in the embedded queryPlanner metadata, showing the stage: 'IXSCAN' element and the index used, indexName: 'customer_id_1'.

- To further optimise the aggregation, the database engine has collapsed the $sort and $limit into a single special internal sort stage which can perform both actions in one go. In this situation, during the sorting process, the aggregation engine only has to track the current three most expensive orders in memory. It does not have to hold the whole data set in memory when sorting, which may otherwise be resource prohibitive in many scenarios, requiring more RAM than is available.
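To illustrate why a combined sort and limit needs so little memory, below is a conceptual sketch in plain JavaScript of tracking only the top N values while records stream past. It is not MongoDB's actual internal implementation. The function name topN() is an assumption made for illustration, and plain numbers are used in place of NumberDecimal values:

```javascript
// Conceptual sketch only: keep the N largest values seen so far, never the whole input
function topN(values, n) {
  var kept = [];  // Holds at most n values, largest first

  for (var value of values) {
    // Find where the new value belongs among the values kept so far
    var pos = kept.findIndex(function(k) { return value > k; });

    if (pos === -1) {
      pos = kept.length;
    }

    if (pos < n) {
      kept.splice(pos, 0, value);              // Insert in sorted position
      kept.length = Math.min(kept.length, n);  // Discard anything beyond the top n
    }
  }

  return kept;
}

var orderValues = [4.59, 187.99, 2.5, 1024.89, 1.75];
var top3 = topN(orderValues, 3);  // [1024.89, 187.99, 4.59]
```

However many values stream through, the kept array never grows beyond three entries, which mirrors the behaviour described in the final point above.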

You might also want to see the execution stats part of the explain plan. The specific new information shown in executionStats, versus the default of queryPlanner, is identical to the normal MQL explain plan returned for a regular find() operation. Consequently, for aggregations, similar principles to MQL apply for spotting things like "have I used the optimal index?" and "does my data model lend itself to efficiently processing this query?".

You ask for the execution stats part of the explain plan:

db.customer_orders.explain("executionStats").aggregate(pipeline);

Below is a redacted example of the output you will see, highlighting some of the most relevant metadata elements you should generally focus on.

executionStats: {

nReturned: 1,

totalKeysExamined: 1,

totalDocsExamined: 1,

executionStages: {

stage: 'FETCH',

nReturned: 1,

works: 2,

advanced: 1,

docsExamined: 1,

inputStage: {

stage: 'IXSCAN',

nReturned: 1,

works: 2,

advanced: 1,

keyPattern: { customer_id: 1 },

indexName: 'customer_id_1',

direction: 'forward',

indexBounds: {

customer_id: [

'["tonijones@myemail.com", "tonijones@myemail.com"]'

]

},

keysExamined: 1,

}

}

}

Here, this part of the plan also shows that the aggregation uses the existing index. Because totalKeysExamined and totalDocsExamined match, the aggregation fully leverages this index to identify the required records, which is good news. Nevertheless, the targeted index doesn't necessarily mean the aggregation's query part is fully optimised. For example, if there is the need to reduce latency further, you can do some analysis to determine if the index can completely cover the query. Suppose the cursor query part of the aggregation is satisfied entirely using the index and does not have to examine any raw documents. In that case, you will see totalDocsExamined: 0 in the explain plan.
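The covered query check described above can be sketched as a small function over the executionStats sub-document. The function name isCoveredByIndex() is hypothetical, and locating executionStats within the full explain output is left to the caller because its position can differ between explain plans:

```javascript
// Hypothetical helper: true when the cursor query part was satisfied from index
// keys alone, without examining any raw documents
function isCoveredByIndex(executionStats) {
  // A query that matches nothing also examines zero documents, so additionally
  // require that at least one index key was examined
  return executionStats.totalDocsExamined === 0 && executionStats.totalKeysExamined > 0;
}

// Using the figures from the explain plan above
var covered = isCoveredByIndex({nReturned: 1, totalKeysExamined: 1, totalDocsExamined: 1});  // false
```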

Pipeline Performance Considerations

Similar to any programming language, there is a downside if you prematurely optimise an aggregation pipeline. You risk producing an over-complicated solution that doesn't address the performance challenges that will manifest. As described in the previous chapter, Using Explain Plans, the tool you should use to identify opportunities for optimisation is the explain plan. You will typically use the explain plan during the final stages of your pipeline's development once it is functionally correct.

With all that said, it can still help you to be aware of some guiding principles regarding performance whilst you are prototyping a pipeline. Critically, such guiding principles will be invaluable to you once the aggregation's explain plan is analysed and if it shows that the current pipeline is sub-optimal.

This chapter outlines three crucial tips to assist you when creating and tuning an aggregation pipeline. For sizeable data sets, adopting these principles may mean the difference between aggregations completing in a few seconds versus minutes, hours or even longer.

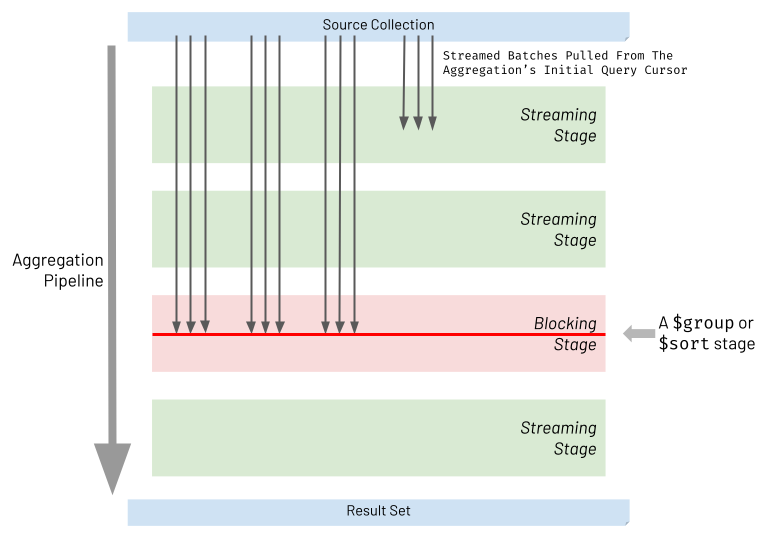

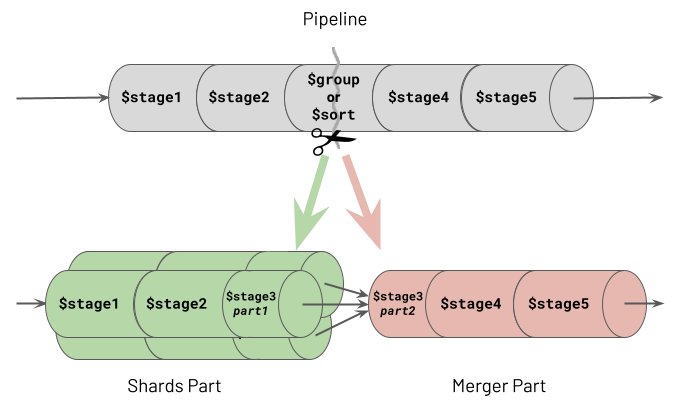

1. Be Cognizant Of Streaming Vs Blocking Stages Ordering

When executing an aggregation pipeline, the database engine pulls batches of records from the initial query cursor generated against the source collection. The database engine then attempts to stream each batch through the aggregation pipeline stages. For most types of stages, referred to as streaming stages, the database engine will take the processed batch from one stage and immediately stream it into the next part of the pipeline. It will do this without waiting for all the other batches to arrive at the prior stage. However, two types of stages must block and wait for all batches to arrive and accumulate together at that stage. These two stages are referred to as blocking stages and specifically, the two types of stages that block are:

- $sort

- $group *

* Actually, when stating $group, this also includes other less frequently used "grouping" stages too, specifically: $bucket, $bucketAuto, $count, $sortByCount & $facet (it's a stretch to call $facet a group stage, but in the context of this topic, it's best to think of it that way)

The diagram below highlights the nature of streaming and blocking stages. Streaming stages allow batches to be processed and then passed through without waiting. Blocking stages wait for the whole of the input data set to arrive and accumulate before processing all this data together.

When considering $sort and $group stages, it becomes evident why they have to block. The following examples illustrate why this is the case:

- $sort blocking example: A pipeline must sort people in ascending order of age. If the stage only sorts each batch's content before passing the batch on to the pipeline's result, only individual batches of output records are sorted by age but not the whole result set.

- $group blocking example: A pipeline must group employees by one of two work departments (either the sales or manufacturing departments). If the stage only groups employees for a batch, before passing it on, the final result contains the work departments repeated multiple times. Each duplicate department consists of some but not all of its employees.
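The grouping example above can be sketched in plain JavaScript. This is a conceptual illustration only, not how the database engine is implemented, and the function name groupByDept() and the sample employees are made up for the purpose:

```javascript
// Conceptual sketch only: group employee records by department
function groupByDept(employees) {
  var groups = {};

  for (var emp of employees) {
    if (!groups[emp.dept]) {
      groups[emp.dept] = [];
    }

    groups[emp.dept].push(emp.name);
  }

  return Object.keys(groups).map(function(dept) {
    return {dept: dept, names: groups[dept]};
  });
}

// Two batches arriving at the grouping stage
var batch1 = [{name: "Ann", dept: "sales"}, {name: "Bob", dept: "manufacturing"}];
var batch2 = [{name: "Cat", dept: "sales"}, {name: "Dan", dept: "manufacturing"}];

// WRONG: grouping each batch separately repeats every department
var perBatch = groupByDept(batch1).concat(groupByDept(batch2));  // 4 partial groups

// RIGHT: wait until all batches have arrived, then group once
var blocked = groupByDept(batch1.concat(batch2));  // 2 complete groups
```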

These often unavoidable blocking stages don't just increase aggregation execution time by reducing concurrency. If used without careful forethought, the throughput and latency of a pipeline will slow dramatically due to significantly increased memory consumption. The following sub-sections explore why this occurs and tactics to mitigate this.

$sort Memory Consumption And Mitigation

Used naïvely, a $sort stage will need to see all the input records at once, and so the host server must have enough capacity to hold all the input data in memory. The amount of memory required depends heavily on the initial data size and the degree to which the prior stages can reduce the size. Also, multiple instances of the aggregation pipeline may be in-flight at any one time, in addition to other database workloads. These all compete for the same finite memory. Suppose the source data set is many gigabytes or even terabytes in size, and earlier pipeline stages have not reduced this size significantly. It will be unlikely that the host machine has sufficient memory to support the pipeline's blocking $sort stage. Therefore, MongoDB enforces that every blocking stage is limited to 100 MB of consumed RAM. The database throws an error if it exceeds this limit.

To avoid the memory limit obstacle, you can set the allowDiskUse:true option for the overall aggregation for handling large result data sets. Consequently, the pipeline's sort operation spills to disk if required, and the 100 MB limit no longer constrains the pipeline. However, the sacrifice here is significantly higher latency, and the execution time is likely to increase by orders of magnitude.
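For reference, the option is passed in the second argument of aggregate(). A minimal sketch, where the wrapper function name aggregateAllowingDiskUse() is hypothetical:

```javascript
// Hypothetical helper: run a pipeline with permission to spill to disk
function aggregateAllowingDiskUse(coll, pipeline) {
  return coll.aggregate(pipeline, {"allowDiskUse": true});
}

// Example usage from the MongoDB Shell:
//   aggregateAllowingDiskUse(db.coll, pipeline);
```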

To circumvent the aggregation needing to manifest the whole data set in memory or overspill to disk, attempt to refactor your pipeline to incorporate one of the following approaches (in order of most effective first):

- Use Index Sort. If the $sort stage does not depend on a $unwind, $group or $project stage preceding it, move the $sort stage to near the start of your pipeline to target an index for the sort. The aggregation runtime does not need to perform an expensive in-memory sort operation as a result. The $sort stage won't necessarily be the first stage in your pipeline because there may also be a $match stage that takes advantage of the same index. Always inspect the explain plan to ensure you are inducing the intended behaviour.

- Use Limit With Sort. If you only need the first subset of records from the sorted set of data, add a $limit stage directly after the $sort stage, limiting the results to the fixed amount you require (e.g. 10). At runtime, the aggregation engine will collapse the $sort and $limit into a single special internal sort stage which performs both actions together. The in-flight sort process only has to track the ten records in memory, which currently satisfy the executing sort/limit rule. It does not have to hold the whole data set in memory to execute the sort successfully.

- Reduce Records To Sort. Move the $sort stage to as late as possible in your pipeline and ensure earlier stages significantly reduce the number of records streaming into this late blocking $sort stage. This blocking stage will have fewer records to process and less thirst for RAM.
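As a sketch of the first two approaches combined, the pipeline below filters, sorts and limits in that order. It assumes a hypothetical collection in which each document is a single order with customer_id and orderdate fields, together with a compound index on those two fields. Neither the collection nor the index appears elsewhere in this chapter, so verify the behaviour with an explain plan for your own data:

```javascript
// Assumed index (hypothetical): db.orders.createIndex({"customer_id": 1, "orderdate": -1})

var pipeline = [
  // Equality match on the index's leading field
  {"$match": {
    "customer_id": "tonijones@myemail.com",
  }},

  // Sort on the index's next field, so an in-memory sort should not be needed
  {"$sort": {
    "orderdate": -1,
  }},

  // Placed directly after the sort, so the engine can combine the two stages
  {"$limit": 10},
];
```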

$group Memory Consumption And Mitigation

Like the $sort stage, the $group stage has the potential to consume a large amount of memory. The aggregation pipeline's 100 MB RAM limit for blocking stages applies equally to the $group stage because it will potentially pressure the host's memory capacity. As with sorting, you can use the pipeline's allowDiskUse:true option to avoid this limit for heavyweight grouping operations, but with the same downsides.

In reality, most grouping scenarios focus on accumulating summary data such as totals, counts, averages, highs and lows, and not itemised data. In these situations, considerably reduced result data sets are produced, requiring far less processing memory than a $sort stage. Contrary to many sorting scenarios, grouping operations will typically demand a fraction of the host's RAM.

To ensure you avoid excessive memory consumption when you are looking to use a $group stage, adopt the following principles:

- Avoid Unnecessary Grouping. This chapter covers this recommendation in far greater detail in the section 2. Avoid Unwinding & Regrouping Documents Just To Process Array Elements.

- Group Summary Data Only. If the use case permits it, use the group stage to accumulate things like totals, counts and summary roll-ups only, rather than holding all the raw data of each record belonging to a group. The Aggregation Framework provides a robust set of accumulator operators to help you achieve this inside a $group stage.
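To make the second principle concrete, the two stage definitions below contrast accumulating raw records with accumulating summary values. They assume a hypothetical collection in which each document is a single order with product_type and value fields, and the output field names are chosen only for illustration:

```javascript
// SUBOPTIMAL: each group holds every raw record that belongs to it
var groupRawData = {"$group": {
  "_id": "$product_type",
  "orders": {"$push": "$$ROOT"},
}};

// BETTER: each group holds only a few summary values
var groupSummaryData = {"$group": {
  "_id": "$product_type",
  "total_value": {"$sum": "$value"},
  "order_count": {"$sum": 1},
  "highest_value": {"$max": "$value"},
}};
```

With the first definition, the memory held by the stage grows with the number of input records. With the second, it grows only with the number of distinct product types.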

2. Avoid Unwinding & Regrouping Documents Just To Process Array Elements

Sometimes, you need an aggregation pipeline to mutate or reduce an array field's content for each record. For example:

- You may need to add together all the values in the array into a total field

- You may need to retain the first and last elements of the array only

- You may need to retain only one recurring field for each sub-document in the array

- ...or numerous other array "reduction" scenarios

To bring this to life, imagine a retail orders collection where each document contains an array of products purchased as part of the order, as shown in the example below:

[

{

_id: 1197372932325,

products: [

{

prod_id: 'abc12345',

name: 'Asus Laptop',

price: NumberDecimal('429.99')

}

]

},

{

_id: 4433997244387,

products: [

{

prod_id: 'def45678',

name: 'Karcher Hose Set',

price: NumberDecimal('23.43')

},

{

prod_id: 'jkl77336',

name: 'Picky Pencil Sharpener',

price: NumberDecimal('0.67')

},

{

prod_id: 'xyz11228',

name: 'Russell Hobbs Chrome Kettle',

price: NumberDecimal('15.76')

}

]

}

]

The retailer wants to see a report of all the orders but only containing the expensive products purchased by customers (e.g. having just products priced greater than 15 dollars). Consequently, an aggregation is required to filter out the inexpensive product items of each order's array. The desired aggregation output might be:

[

{

_id: 1197372932325,

products: [

{

prod_id: 'abc12345',

name: 'Asus Laptop',

price: NumberDecimal('429.99')

}

]

},

{

_id: 4433997244387,

products: [

{

prod_id: 'def45678',

name: 'Karcher Hose Set',

price: NumberDecimal('23.43')

},

{

prod_id: 'xyz11228',

name: 'Russell Hobbs Chrome Kettle',

price: NumberDecimal('15.76')

}

]

}

]

Notice order 4433997244387 now only shows two products and is missing the inexpensive product.

One naïve way of achieving this transformation is to unwind the products array of each order document to produce an intermediate set of individual product records. These records can then be matched to retain products priced greater than 15 dollars. Finally, the products can be grouped back together again by each order's _id field. The required pipeline to achieve this is below:

// SUBOPTIMAL

var pipeline = [

// Unpack each product from each order's products array as a new separate record

{"$unwind": {

"path": "$products",

}},

// Match only products valued over 15.00

{"$match": {

"products.price": {

"$gt": NumberDecimal("15.00"),

},

}},

// Group the remaining products back together by order ID

{"$group": {

"_id": "$_id",

"products": {"$push": "$products"},

}},

];

This pipeline is suboptimal because a $group stage has been introduced, which is a blocking stage, as outlined earlier in this chapter. Both memory consumption and execution time will increase significantly, which could be fatal for a large input data set. There is a far better alternative by using one of the Array Operators instead. Array Operators are sometimes less intuitive to code, but they avoid introducing a blocking stage into the pipeline. Consequently, they are significantly more efficient, especially for large data sets. Shown below is a far more economical pipeline, using the $filter array operator, rather than the $unwind/$match/$group combination, to produce the same outcome:

// OPTIMAL

var pipeline = [

// Filter out products valued 15.00 or less

{"$set": {

"products": {

"$filter": {

"input": "$products",

"as": "product",

"cond": {"$gt": ["$$product.price", NumberDecimal("15.00")]},

}

},

}},

];

Unlike the suboptimal pipeline, the optimal pipeline will include "empty orders" in the results for those orders that contained only inexpensive items. If this is a problem, you can include a simple $match stage at the start of the optimal pipeline with the same content as the $match stage shown in the suboptimal example.
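As a minimal sketch of that adjustment, the pipeline below places a $match ahead of the $filter step so that orders containing only inexpensive items are dropped up front. It assumes a plain number literal is acceptable for the comparison, since MongoDB compares numeric types by value; in the MongoDB Shell you could equally use NumberDecimal("15.00"), as in the pipelines above. The priceThreshold variable name is introduced here purely for illustration.

```javascript
// Assumption: the threshold is written as a plain number so the snippet also
// loads outside the MongoDB Shell; NumberDecimal("15.00") is the shell equivalent
var priceThreshold = 15;

var pipeline = [
  // Keep only orders that contain at least one product valued over 15.00
  {"$match": {
    "products.price": {"$gt": priceThreshold},
  }},

  // Filter out products valued 15.00 or less
  {"$set": {
    "products": {
      "$filter": {
        "input": "$products",
        "as": "product",
        "cond": {"$gt": ["$$product.price", priceThreshold]},
      }
    },
  }},
];
```

Because the extra $match is the first stage, it forms part of the initial query, so it may also benefit from an index on products.price if one exists.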

To reiterate, there should never be the need to use an $unwind/$group combination in an aggregation pipeline to transform an array field's elements for each document in isolation. One way to recognise this anti-pattern is if your pipeline contains a $group on a $_id field. Instead, use Array Operators to avoid introducing a blocking stage. Otherwise, you may suffer an order-of-magnitude increase in execution time when the blocking $group stage in your pipeline handles more than 100 MB of in-flight data. Adopting this best practice may mean the difference between achieving the required business outcome and abandoning the whole task as unachievable.

The primary use of an $unwind/$group combination is to correlate patterns across many records' arrays rather than transforming the content within each input record's array only. For an illustration of an appropriate use of $unwind/$group refer to this book's Unpack Array & Group Differently example.

3. Encourage Match Filters To Appear Early In The Pipeline

Explore If Bringing Forward A Full Match Is Possible

As discussed, the database engine will do its best to optimise the aggregation pipeline at runtime, with a particular focus on attempting to move the $match stages to the top of the pipeline. Top-level $match content will form part of the filter that the engine first executes as the initial query. The aggregation then has the best chance of leveraging an index. However, it may not always be possible to promote $match filters in such a way without changing the meaning and resulting output of an aggregation.

Sometimes, a $match stage is defined later in a pipeline to perform a filter on a field that the pipeline computed in an earlier stage. The computed field isn't present in the pipeline's original input collection. Some examples are:

- A pipeline where a $group stage creates a new total field based on an accumulator operator. Later in the pipeline, a $match stage filters groups where each group's total is greater than 1000.

- A pipeline where a $set stage computes a new total field value based on adding up all the elements of an array field in each document. Later in the pipeline, a $match stage filters documents where the total is less than 50.

At first glance, it may seem like the match on the computed field is irreversibly trapped behind an earlier stage that computed the field's value. Indeed the aggregation engine cannot automatically optimise this further. In some situations, though, there may be a missed opportunity where beneficial refactoring is possible by you, the developer.

Take the following trivial example of a collection of customer order documents:

[

{

customer_id: 'elise_smith@myemail.com',

orderdate: ISODate('2020-05-30T08:35:52.000Z'),

value: NumberDecimal('9999')

},

{

customer_id: 'elise_smith@myemail.com',

orderdate: ISODate('2020-01-13T09:32:07.000Z'),

value: NumberDecimal('10101')

}

]

Let's assume the orders are priced in dollars, and each value field shows the order's value in cents. You may have built a pipeline to display all orders where the value is at least 100 dollars, like below:

// SUBOPTIMAL

var pipeline = [

{"$set": {

"value_dollars": {"$multiply": [0.01, "$value"]}, // Converts cents to dollars

}},

{"$unset": [

"_id",

"value",

]},

{"$match": {

"value_dollars": {"$gte": 100}, // Performs a dollar check

}},

];

The collection has an index defined for the value field (in cents). However, the $match filter uses a computed field, value_dollars. When you view the explain plan, you will see the pipeline does not leverage the index. The $match is trapped behind the $set stage (which computes the field) and cannot be moved to the pipeline's start. MongoDB's aggregation engine tracks a field's dependencies across multiple stages in a pipeline. It can establish how far up the pipeline it can promote a stage without risking a change in the aggregation's behaviour. In this case, it knows that if it moves the $match stage ahead of the $set stage it depends on, things will not work correctly.

In this example, as a developer, you can easily make a pipeline modification that will enable this pipeline to be more optimal without changing the pipeline's intended outcome. Change the $match filter to be based on the source field value instead (at least 10000 cents), rather than the computed field (at least 100 dollars). Also, ensure the $match stage appears before the $unset stage (which removes the value field). This change is enough to allow the pipeline to run efficiently. Below is how the pipeline looks after you have made this change:

// OPTIMAL

var pipeline = [

{"$set": {

"value_dollars": {"$multiply": [0.01, "$value"]},

}},

{"$match": { // Moved to before the $unset

"value": {"$gte": 10000}, // Changed to perform a cents check

}},

{"$unset": [

"_id",

"value",

]},

];

This pipeline produces the same data output. However, when you look at its explain plan, it shows the database engine has pushed the $match filter to the top of the pipeline and used an index on the value field. The aggregation is now optimal because the $match stage is no longer "blocked" by its dependency on the computed field.

Explore If Bringing Forward A Partial Match Is Possible

There may be some cases where you can't unravel a computed value in such a manner. However, it may still be possible for you to include an additional $match stage, to perform a partial match targeting the aggregation's query cursor. Suppose you have a pipeline that masks the values of sensitive date_of_birth fields (replaced with computed masked_date fields). The computed field adds a random number of days (one to seven) to each original date. The pipeline already contains a $match stage with the filter masked_date > 01-Jan-2020. The runtime cannot move this to the top of the pipeline due to the dependency on a computed value. Nevertheless, you can manually add an extra $match stage at the top of the pipeline, with the filter date_of_birth > 25-Dec-2019. This new $match can leverage an index on date_of_birth and uses a cut-off seven days earlier than the existing $match, so it never excludes a record the existing filter would keep, and the aggregation's final output is the same. The new $match may pass on a few more records than intended. However, later on, the pipeline applies the existing filter masked_date > 01-Jan-2020, which will naturally remove surviving surplus records before the pipeline completes.
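The scenario above could be sketched as the pipeline below. The masking expression is an assumption for illustration only, since the original masking pipeline is not shown here: it uses $rand, which requires MongoDB 4.4.2 or later, to add between one and seven whole days. The midnight UTC cut-off times and the DAY_MS helper constant are also assumed.

```javascript
// Hypothetical helper constant: number of milliseconds in one day
var DAY_MS = 24 * 60 * 60 * 1000;

var pipeline = [
  // Extra partial match on the source field, seven days before the real cut-off,
  // so the initial query can use an index on date_of_birth (if one exists)
  {"$match": {
    "date_of_birth": {"$gt": new Date("2019-12-25T00:00:00Z")},
  }},

  // Assumed masking logic: add a random whole number of days (1 to 7)
  {"$set": {
    "masked_date": {"$add": [
      "$date_of_birth",
      {"$multiply": [
        {"$add": [1, {"$floor": {"$multiply": [{"$rand": {}}, 7]}}]},
        DAY_MS,
      ]},
    ]},
  }},

  // Remove the sensitive source field
  {"$unset": [
    "date_of_birth",
  ]},

  // Existing match on the computed field, which removes any surplus records
  {"$match": {
    "masked_date": {"$gt": new Date("2020-01-01T00:00:00Z")},
  }},
];
```

The first $match is deliberately looser than the last one: a record can only end up with a masked_date after 01-Jan-2020 if its date_of_birth is after 25-Dec-2019, so no qualifying record is lost.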

Pipeline Match Summary

In summary, if you have a pipeline leveraging a $match stage and the explain plan shows this is not moving to the start of the pipeline, explore whether manually refactoring will help. If the $match filter depends on a computed value, examine if you can alter this or add an extra $match to yield a more efficient pipeline.

Expressions Explained

Summarising Aggregation Expressions

Expressions give aggregation pipelines their data manipulation power. However, they tend to be something that developers start using by just copying examples from the MongoDB Manual and then refactoring these without thinking enough about what they are. Proficiency in aggregation pipelines demands a deeper understanding of expressions.

Aggregation expressions come in one of three primary flavours:

- Operators. Accessed as an object with a $ prefix followed by the operator function name. The "dollar-operator-name" is used as the main key for the object. Examples: {$arrayElemAt: ...}, {$cond: ...}, {$dateToString: ...}

- Field Paths. Accessed as a string with a $ prefix followed by the field's path in each record being processed. Examples: "$account.sortcode", "$addresses.address.city"

- Variables. Accessed as a string with a $$ prefix followed by the fixed name and falling into three sub-categories:

  - Context System Variables. With values coming from the system environment rather than each input record an aggregation stage is processing. Examples: "$$NOW", "$$CLUSTER_TIME"

  - Marker Flag System Variables. To indicate desired behaviour to pass back to the aggregation runtime. Examples: "$$ROOT", "$$REMOVE", "$$PRUNE"

  - Bind User Variables. For storing values you declare with a $let operator (or with the let option of a $lookup stage, or the as option of a $map or $filter operator). Examples: "$$product_name_var", "$$orderIdVal"

You can combine these three categories of aggregation expressions when operating on input records, enabling you to perform complex comparisons and transformations of data. To highlight this, the code snippet below is an excerpt from this book's Mask Sensitive Fields example, which combines all three kinds of expression.

"customer_info": {"$cond": {

"if": {"$eq": ["$customer_info.category", "SENSITIVE"]},

"then": "$$REMOVE",

"else": "$customer_info",

}}

The pipeline retains an embedded sub-document (customer_info) in each resulting record unless a field in the original sub-document has a specific value (category=SENSITIVE). {$cond: ...} is one of the operator expressions used in the excerpt (a "conditional" operator expression which takes three arguments: if, then & else). {$eq: ...} is another operator expression (a "comparison" operator expression). "$$REMOVE" is a "marker flag" variable expression instructing the pipeline to exclude the field. Both "$customer_info.category" and "$customer_info" elements are field path expressions referencing each incoming record's fields.
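The excerpt above does not use a context system variable or a bind user variable. As a rough sketch of those two remaining sub-categories, the stage below declares a user variable with $let and reads the "$$NOW" system variable. The field names (price, price_with_tax, processed_at) and the tax_rate value are hypothetical and chosen only for illustration.

```javascript
var pipeline = [
  {"$set": {
    // Operator expression ($let) declaring a bind user variable (tax_rate),
    // then combining it with a field path ("$price") via "$$tax_rate"
    "price_with_tax": {"$let": {
      "vars": {"tax_rate": 0.2},
      "in": {"$multiply": ["$price", {"$add": [1, "$$tax_rate"]}]},
    }},

    // Context system variable: the same timestamp for every record in this run
    "processed_at": "$$NOW",
  }},
];
```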

What Do Expressions Produce?

As described above, an expression can be an Operator (e.g. {$concat: ...}), a Variable (e.g. "$$ROOT") or a Field Path (e.g. "$address"). In all these cases, an expression is just something that dynamically populates and returns a new JSON/BSON data type element, which can be one of:

- a Number (including integer, long, float, double, decimal128)

- a String (UTF-8)

- a Boolean

- a DateTime (UTC)

- an Array

- an Object

However, a specific expression can restrict you to returning just one or a few of these types. For example, the {$concat: ...} Operator, which combines multiple strings, can only produce a String data type (or null). The Variable "$$ROOT" can only return an Object which refers to the root document currently being processed in the pipeline stage.

A Field Path (e.g. "$address") is different and can return an element of any data type, depending on what the field refers to in the current input document. For example, suppose "$address" references a sub-document. In this case, it will return an Object. However, if it references a list of elements, it will return an Array. As a human, you can guess that the Field Path "$address" won't return a DateTime, but the aggregation runtime does not know this ahead of time. There could be even more dynamics at play. Due to MongoDB's flexible data model, "$address" could yield a different type for each record processed in a pipeline stage. The first record's address may be an Object sub-document with street name and city fields. The second record's address might represent the full address as a single String.
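One way to observe this per-record variation is to ask the runtime which type a field path resolved to. The sketch below uses the $type operator for this; the address_type output field name is an assumption made for illustration.

```javascript
var pipeline = [
  // Record the BSON type that the "$address" field path yields for each document
  {"$set": {
    "address_type": {"$type": "$address"},
  }},
];
```

For the records described above, you would expect address_type to be "object" for the sub-document form and "string" for the single-string form. The operator reports "missing" where a record has no address field at all.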

In summary, Field Paths and Bind User Variables are expressions that can return any JSON/BSON data type at runtime depending on their context. For the other kinds of expressions (Operators, Context System Variables and Marker Flag System Variables), the data type each can return is fixed to one or a set number of documented types. To establish the exact data type produced by these specific operators, you need to consult the Aggregation Pipeline Quick Reference documentation.